-

We are excited to announce that AI Gateway now supports real-time AI interactions with the new Realtime WebSockets API.

This new capability allows developers to establish persistent, low-latency connections between their applications and AI models, enabling natural, real-time conversational AI experiences, including speech-to-speech interactions.

The Realtime WebSockets API works with the OpenAI Realtime API ↗ and the Google Gemini Live API ↗, and supports real-time text and speech interactions with models from Cartesia ↗ and ElevenLabs ↗.

Here's how you can connect AI Gateway to OpenAI's Realtime API ↗ using WebSockets:

OpenAI Realtime API example

    import WebSocket from "ws";

    const url =
      "wss://gateway.ai.cloudflare.com/v1/<account_id>/<gateway>/openai?model=gpt-4o-realtime-preview-2024-12-17";

    const ws = new WebSocket(url, {
      headers: {
        "cf-aig-authorization": process.env.CLOUDFLARE_API_KEY,
        Authorization: "Bearer " + process.env.OPENAI_API_KEY,
        "OpenAI-Beta": "realtime=v1",
      },
    });

    ws.on("open", () => {
      console.log("Connected to server.");
      // Send only once the socket is open: ws throws if send() is called while still connecting.
      ws.send(
        JSON.stringify({
          type: "response.create",
          response: { modalities: ["text"], instructions: "Tell me a joke" },
        }),
      );
    });

    ws.on("message", (message) => console.log(JSON.parse(message.toString())));

Get started by checking out the Realtime WebSockets API documentation.
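The URL and headers in the example above can be assembled from their parts. The following is a minimal sketch: `buildOpenAIRealtimeUrl` and `buildRealtimeHeaders` are hypothetical helper names, not part of any SDK, and the URL shape is taken only from the OpenAI example shown here. Other providers may use different paths or query parameters.

```typescript
// Hypothetical helpers: names and signatures are illustrative assumptions.
// The URL layout mirrors the OpenAI example above and nothing else.
function buildOpenAIRealtimeUrl(
  accountId: string,
  gatewayId: string,
  model: string,
): string {
  const base = "wss://gateway.ai.cloudflare.com/v1";
  const path = [accountId, gatewayId, "openai"]
    .map((segment) => encodeURIComponent(segment))
    .join("/");
  return `${base}/${path}?model=${encodeURIComponent(model)}`;
}

// Builds the same three headers used in the example above.
function buildRealtimeHeaders(
  gatewayToken: string,
  openAiKey: string,
): Record<string, string> {
  return {
    "cf-aig-authorization": gatewayToken,
    Authorization: `Bearer ${openAiKey}`,
    "OpenAI-Beta": "realtime=v1",
  };
}
```

Keeping URL construction in one place makes it harder to send a request with an unescaped account or gateway identifier.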

-

Document conversion plays an important role when designing and developing AI applications and agents. Workers AI now provides the `toMarkdown` utility method, which developers can use for quick, easy, and convenient conversion and summary of documents in multiple formats to Markdown.

You can call this new tool using a binding by calling `env.AI.toMarkdown()` or by using the REST API endpoint.

In this example, we fetch a PDF document and an image from R2 and feed them both to `env.AI.toMarkdown()`. The result is a list of converted documents. Workers AI models are used automatically to detect and summarize the image.

    import { Env } from "./env";

    export default {
      async fetch(request: Request, env: Env, ctx: ExecutionContext) {
        // https://pub-979cb28270cc461d94bc8a169d8f389d.r2.dev/somatosensory.pdf
        const pdf = await env.R2.get("somatosensory.pdf");

        // https://pub-979cb28270cc461d94bc8a169d8f389d.r2.dev/cat.jpeg
        const cat = await env.R2.get("cat.jpeg");

        // R2 returns null for a missing object, so check before reading the bodies.
        if (!pdf || !cat) {
          return new Response("Document not found", { status: 404 });
        }

        return Response.json(
          await env.AI.toMarkdown([
            {
              name: "somatosensory.pdf",
              blob: new Blob([await pdf.arrayBuffer()], { type: "application/octet-stream" }),
            },
            {
              name: "cat.jpeg",
              blob: new Blob([await cat.arrayBuffer()], { type: "application/octet-stream" }),
            },
          ]),
        );
      },
    };

This is the result:

    [
      {
        "name": "somatosensory.pdf",
        "mimeType": "application/pdf",
        "format": "markdown",
        "tokens": 0,
        "data": "# somatosensory.pdf\n## Metadata\n- PDFFormatVersion=1.4\n- IsLinearized=false\n- IsAcroFormPresent=false\n- IsXFAPresent=false\n- IsCollectionPresent=false\n- IsSignaturesPresent=false\n- Producer=Prince 20150210 (www.princexml.com)\n- Title=Anatomy of the Somatosensory System\n\n## Contents\n### Page 1\nThis is a sample document to showcase..."
      },
      {
        "name": "cat.jpeg",
        "mimeType": "image/jpeg",
        "format": "markdown",
        "tokens": 0,
        "data": "The image is a close-up photograph of Grumpy Cat, a cat with a distinctive grumpy expression and piercing blue eyes. The cat has a brown face with a white stripe down its nose, and its ears are pointed upright. Its fur is light brown and darker around the face, with a pink nose and mouth. The cat's eyes are blue and slanted downward, giving it a perpetually grumpy appearance. The background is blurred, but it appears to be a dark brown color. Overall, the image is a humorous and iconic representation of the popular internet meme character, Grumpy Cat. The cat's facial expression and posture convey a sense of displeasure or annoyance, making it a relatable and entertaining image for many people."
      }
    ]

See Markdown Conversion for more information on supported formats, REST API and pricing.
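Because the result is a list with one entry per input document, a common next step is to merge the entries into a single Markdown string, for example to pass to a model as context. The sketch below assumes the result shape shown in the sample output above. The `ConversionResult` interface and the `combineConversions` helper are hypothetical names, not official types or APIs.

```typescript
// Shape inferred from the sample output above; an assumption, not an official type.
interface ConversionResult {
  name: string;
  mimeType: string;
  format: string;
  tokens: number;
  data: string;
}

// Hypothetical helper: joins every Markdown result into one document,
// labelling each part with its source file in an HTML comment.
function combineConversions(results: ConversionResult[]): string {
  return results
    .filter((result) => result.format === "markdown")
    .map(
      (result) =>
        `<!-- source: ${result.name} (${result.mimeType}) -->\n${result.data.trim()}`,
    )
    .join("\n\n---\n\n");
}
```

Filtering on `format` means entries that did not convert to Markdown are left out of the merged document.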

-

📝 We've renamed the Agents package to `agents`!

If you've already been building with the Agents SDK, you can update your dependencies to use the new package name, and replace references to `agents-sdk` with `agents`:

    # Install the new package
    npm i agents

    # Remove the old (deprecated) package
    npm uninstall agents-sdk

    # Find instances of the old package name in your codebase
    grep -r 'agents-sdk' .

    # Replace instances of the old package name with the new one
    # (or use find-replace in your editor)
    sed -i 's/agents-sdk/agents/g' $(grep -rl 'agents-sdk' .)

All future updates will be pushed to the new `agents` package, and the older package has been marked as deprecated.

We've added a number of big new features to the Agents SDK over the past few weeks, including:

- You can now set `cors: true` when using `routeAgentRequest` to return permissive default CORS headers to Agent responses.

- The regular client now syncs state on the agent (just like the React version).

- `useAgentChat` bug fixes for passing headers/credentials, including properly clearing cache on unmount.

- Experimental `/schedule` module with a prompt/schema for adding scheduling to your app (with evals!).

- Changed the internal `zod` schema to be compatible with the limitations of Google's Gemini models by removing the discriminated union, allowing you to use Gemini models with the scheduling API.

We've also fixed a number of bugs with state synchronization and the React hooks.

JavaScript:

    // via https://github.com/cloudflare/agents/tree/main/examples/cross-domain
    import { routeAgentRequest } from "agents";

    export default {
      async fetch(request, env) {
        return (
          // Set { cors: true } to enable CORS headers.
          (await routeAgentRequest(request, env, { cors: true })) ||
          new Response("Not found", { status: 404 })
        );
      },
    };

TypeScript:

    // via https://github.com/cloudflare/agents/tree/main/examples/cross-domain
    import { routeAgentRequest } from "agents";

    export default {
      async fetch(request: Request, env: Env) {
        return (
          // Set { cors: true } to enable CORS headers.
          (await routeAgentRequest(request, env, { cors: true })) ||
          new Response("Not found", { status: 404 })
        );
      },
    } satisfies ExportedHandler<Env>;

We've added a new `@unstable_callable()` decorator for defining methods that can be called directly from clients. This allows you to call methods (with arguments) from within your client code and get native JavaScript objects back.

JavaScript:

    // server.ts
    import { unstable_callable, Agent } from "agents";

    export class Rpc extends Agent {
      // Use the decorator to define a callable method
      @unstable_callable({
        description: "rpc test",
      })
      async getHistory() {
        return this.sql`SELECT * FROM history ORDER BY created_at DESC LIMIT 10`;
      }
    }

TypeScript:

    // server.ts
    import { unstable_callable, Agent, type StreamingResponse } from "agents";
    import type { Env } from "../server";

    export class Rpc extends Agent<Env> {
      // Use the decorator to define a callable method
      @unstable_callable({
        description: "rpc test",
      })
      async getHistory() {
        return this.sql`SELECT * FROM history ORDER BY created_at DESC LIMIT 10`;
      }
    }

We've fixed a number of small bugs in the `agents-starter` ↗ project, a real-time, chat-based example application with tool-calling and human-in-the-loop built using the Agents SDK. The starter has also been upgraded to use the latest wrangler v4 release.

If you're new to Agents, you can install and run the `agents-starter` project in two commands:

    # Install it
    $ npm create cloudflare@latest agents-starter -- --template="cloudflare/agents-starter"

    # Run it
    $ npm run start

You can use the starter as a template for your own Agents projects: open up `src/server.ts` and `src/client.tsx` to see how the Agents SDK is used.

We've heard your feedback on the Agents SDK documentation, and we're shipping more API reference material and usage examples, including:

- Expanded API reference documentation, covering the methods and properties exposed by the Agents SDK, as well as more usage examples.

- More Client API documentation that documents `useAgent`, `useAgentChat` and the new `@unstable_callable` RPC decorator exposed by the SDK.

- New documentation on how to call agents and (optionally) authenticate clients before they connect to your Agents.

Note that the Agents SDK is continually growing: the type definitions included in the SDK will always include the latest APIs exposed by the `agents` package.

If you're still wondering what Agents are, read our blog on building AI Agents on Cloudflare ↗ and/or visit the Agents documentation to learn more.


-

Radar has expanded its security insights, providing visibility into aggregate trends in authentication requests, including credentials flagged as compromised by leaked credentials detection scans.

We have now introduced the following endpoints:

- `summary`: Retrieves summaries of HTTP authentication requests distribution across two different dimensions.

- `timeseries_group`: Retrieves timeseries data for HTTP authentication requests distribution across two different dimensions.

The following dimensions are available, displaying the distribution of HTTP authentication requests based on:

- `compromised`: Credential status (clean vs. compromised).

- `bot_class`: Bot class (human vs. bot).

Dive deeper into leaked credential detection in this blog post ↗ and learn more about the expanded Radar security insights in our blog post ↗.

-

Workers AI is excited to add 4 new models to the catalog, including 2 brand new classes of models with a text-to-speech and reranker model. Introducing:

- @cf/baai/bge-m3 - a multi-lingual embeddings model that supports over 100 languages. It can also simultaneously perform dense retrieval, multi-vector retrieval, and sparse retrieval, with the ability to process inputs of different granularities.

- @cf/baai/bge-reranker-base - our first reranker model! Rerankers are a type of text classification model that takes a query and context, and outputs a similarity score between the two. When used in RAG systems, you can use a reranker after the initial vector search to find the most relevant documents to return to a user by reranking the outputs.

- @cf/openai/whisper-large-v3-turbo - a faster, more accurate speech-to-text model. This model was added earlier but is graduating out of beta with pricing included today.

- @cf/myshell-ai/melotts - our first text-to-speech model that allows users to generate an MP3 with voice audio from inputted text.
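The reranking step described above can be sketched independently of any model call. The example below assumes you already have one relevance score per candidate document, for example from a reranker. `rerankByScore` and `ScoredDocument` are hypothetical names, and the sketch makes no claim about the reranker model's actual request or response format.

```typescript
interface ScoredDocument {
  text: string;
  score: number;
}

// Hypothetical helper: pairs each candidate from the initial vector search
// with its relevance score, sorts by descending score, and keeps the top K.
function rerankByScore(
  candidates: string[],
  scores: number[],
  topK: number,
): ScoredDocument[] {
  if (candidates.length !== scores.length) {
    throw new Error("Expected exactly one score per candidate document");
  }
  return candidates
    .map((text, index) => ({ text, score: scores[index] }))
    .sort((a, b) => b.score - a.score)
    .slice(0, Math.max(0, topK));
}
```

A typical pattern is to retrieve a generous candidate set with vector search and then keep only the few highest-scoring documents after reranking.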

Pricing is available for each of these models on the Workers AI pricing page.

This docs update includes a few minor bug fixes to the model schema for llama-guard, llama-3.2-1b, which you can review on the product changelog.

Try it out and let us know what you think! Stay tuned for more models in the coming days.

-

You can now access bindings from anywhere in your Worker by importing the `env` object from `cloudflare:workers`.

Previously, `env` could only be accessed during a request. This meant that bindings could not be used in the top-level context of a Worker.

Now, you can import `env` and access bindings such as secrets or environment variables in the initial setup for your Worker:

    import { env } from "cloudflare:workers";
    import ApiClient from "example-api-client";

    // API_KEY and LOG_LEVEL now usable in top-level scope
    const apiClient = ApiClient.new({ apiKey: env.API_KEY });
    const LOG_LEVEL = env.LOG_LEVEL || "info";

    export default {
      fetch(req) {
        // you can use apiClient or LOG_LEVEL, configured before any request is handled
      },
    };

Additionally, `env` was normally accessed as an argument to a Worker's entrypoint handler, such as `fetch`. This meant that if you needed to access a binding from a deeply nested function, you had to pass `env` as an argument through many functions to get it to the right spot. This could be cumbersome in complex codebases.

Now, you can access the bindings from anywhere in your codebase without passing `env` as an argument:

    // helpers.js
    import { env } from "cloudflare:workers";

    // env is *not* an argument to this function
    export async function getValue(key) {
      let prefix = env.KV_PREFIX;
      return await env.KV.get(`${prefix}-${key}`);
    }

For more information, see documentation on accessing `env`.

-

You can now retry your Cloudflare Pages and Workers builds directly from GitHub. No need to switch to the Cloudflare Dashboard for a simple retry!

Let’s say you push a commit, but your build fails due to a spurious error like a network timeout. Instead of going to the Cloudflare Dashboard to manually retry, you can now rerun the build with just a few clicks inside GitHub, keeping you inside your workflow.

For Pages and Workers projects connected to a GitHub repository:

- When a build fails, go to your GitHub repository or pull request

- Select the failed Check Run for the build

- Select "Details" on the Check Run

- Select "Rerun" to trigger a retry build for that commit

Learn more about Pages Builds and Workers Builds.

-

You can now debug your Workers tests with our Vitest integration by running the following command:

    vitest --inspect --no-file-parallelism

Attach a debugger to port 9229 and you can start stepping through your Workers tests. This is available with `@cloudflare/vitest-pool-workers` v0.7.5 or later.

Learn more in our documentation.

-

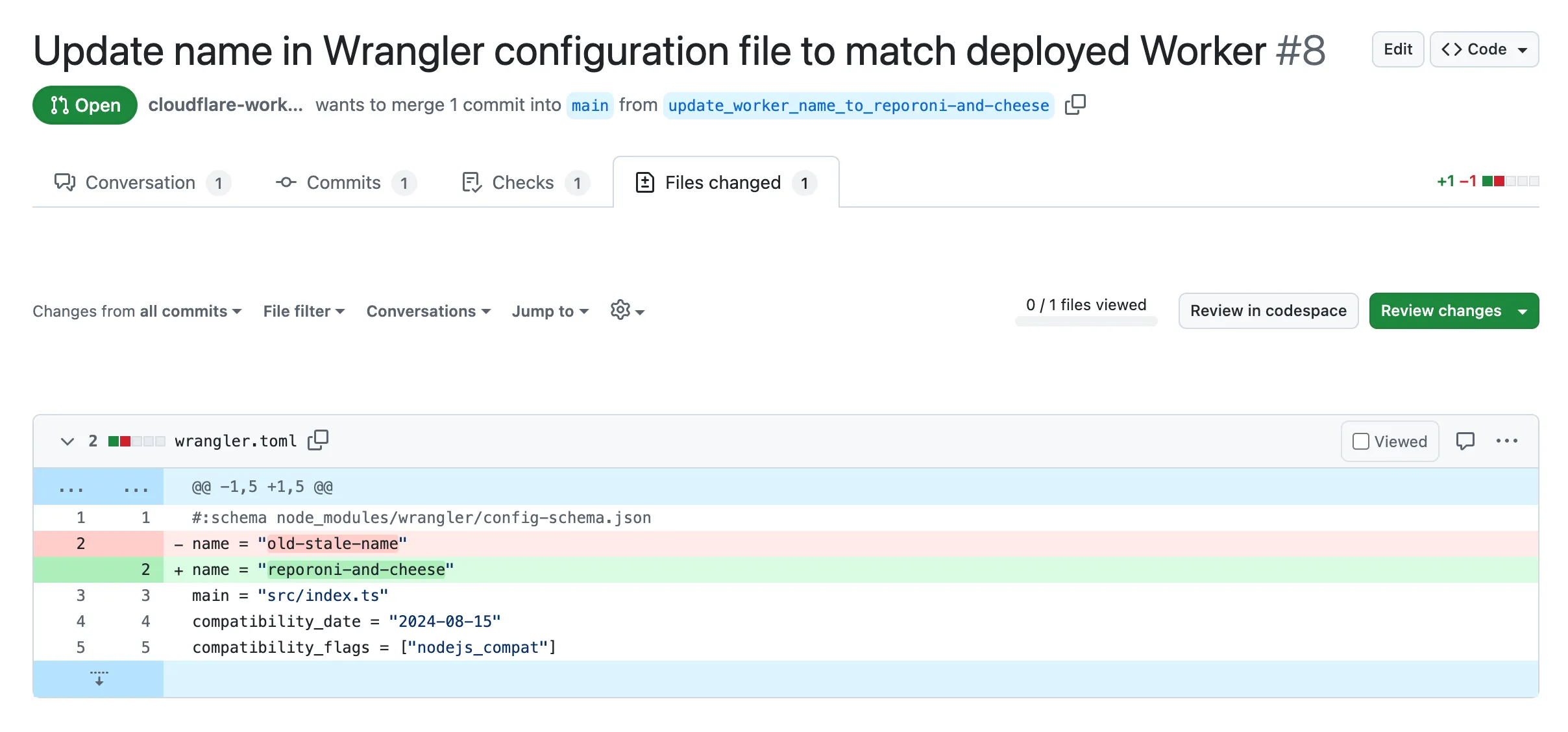

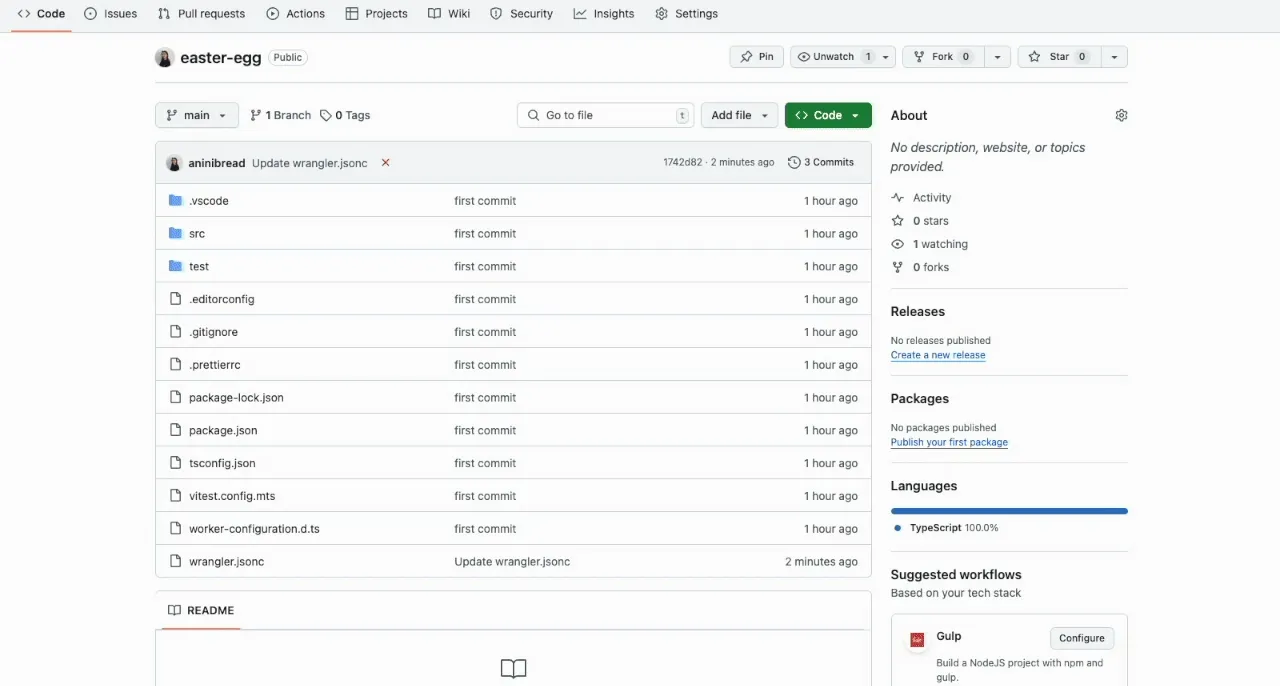

We've released the next major version of Wrangler, the CLI for Cloudflare Workers: `wrangler@4.0.0`. Wrangler v4 is a major release focused on updates to underlying systems and dependencies, along with improvements to keep Wrangler commands consistent and clear.

You can run the following command to install it in your projects:

    # npm
    npm i wrangler@latest

    # pnpm
    pnpm add wrangler@latest

    # yarn
    yarn add wrangler@latest

Unlike previous major versions of Wrangler, which were foundational rewrites ↗ and rearchitectures ↗, Version 4 of Wrangler includes a much smaller set of changes. If you use Wrangler today, your workflow is very unlikely to change.

A detailed migration guide is available. If you find a bug or hit a roadblock when upgrading to Wrangler v4, open an issue on the `cloudflare/workers-sdk` repository on GitHub ↗.

Going forward, we'll continue supporting Wrangler v3 with bug fixes and security updates until Q1 2026, and with critical security updates until Q1 2027, at which point it will be out of support.

-

We’re removing some of the restrictions in Email Routing so that AI Agents and task automation can better handle email workflows, including how Workers can reply to incoming emails.

It's now possible to keep a threaded email conversation with an Email Worker script as long as:

- The incoming email has to have valid DMARC ↗.

- The email can only be replied to once in the same `EmailMessage` event.

- The recipient in the reply must match the incoming sender.

- The outgoing sender domain must match the same domain that received the email.

- Every time an email passes through Email Routing or another MTA, an entry is added to the `References` list. We stop accepting replies to emails with more than 100 `References` entries to prevent abuse or accidental loops.
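The conditions above can be expressed as a pre-flight check that a Worker runs before attempting a reply. This is only an illustrative sketch of the listed rules, not how Email Routing enforces them. `ReplyCheck`, `replyBlockers`, and the field names are hypothetical, and address comparison is simplified to a case-insensitive string match.

```typescript
// Limit taken from the rule above: replies are refused past 100 References entries.
const MAX_REFERENCES = 100;

// Hypothetical input shape; the caller is assumed to have gathered these values.
interface ReplyCheck {
  dmarcValid: boolean; // incoming email passed DMARC
  alreadyReplied: boolean; // a reply was already sent in this event
  incomingSender: string; // address the email came from
  incomingRecipient: string; // address that received the email
  replySender: string; // address the reply is sent from
  replyRecipient: string; // address the reply is sent to
  referencesCount: number; // number of entries in the References list
}

function domainOf(address: string): string {
  return address.slice(address.lastIndexOf("@") + 1).trim().toLowerCase();
}

// Returns the list of rules that would block a reply; empty means none apply.
function replyBlockers(check: ReplyCheck): string[] {
  const blockers: string[] = [];
  if (!check.dmarcValid) blockers.push("incoming email does not have valid DMARC");
  if (check.alreadyReplied) blockers.push("email was already replied to in this event");
  if (
    check.replyRecipient.trim().toLowerCase() !==
    check.incomingSender.trim().toLowerCase()
  ) {
    blockers.push("reply recipient does not match the incoming sender");
  }
  if (domainOf(check.replySender) !== domainOf(check.incomingRecipient)) {
    blockers.push("outgoing sender domain does not match the receiving domain");
  }
  if (check.referencesCount > MAX_REFERENCES) {
    blockers.push("more than 100 References entries");
  }
  return blockers;
}
```

Returning every failed rule, instead of stopping at the first, makes it easier to log why a reply was skipped.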

Here's an example of a Worker responding to Emails using a Workers AI model:

AI model responding to emails

    import PostalMime from "postal-mime";
    import { createMimeMessage } from "mimetext";
    import { EmailMessage } from "cloudflare:email";

    export default {
      async email(message, env, ctx) {
        const email = await PostalMime.parse(message.raw);
        const res = await env.AI.run("@cf/meta/llama-2-7b-chat-fp16", {
          messages: [
            {
              role: "user",
              content: email.text ?? "",
            },
          ],
        });

        // message-id is generated by mimetext
        const response = createMimeMessage();
        response.setHeader("In-Reply-To", message.headers.get("Message-ID")!);
        response.setSender("agent@example.com");
        response.setRecipient(message.from);
        response.setSubject("Llama response");
        response.addMessage({
          contentType: "text/plain",
          data: res instanceof ReadableStream ? await new Response(res).text() : res.response!,
        });

        const replyMessage = new EmailMessage("<email>", message.from, response.asRaw());
        await message.reply(replyMessage);
      },
    } satisfies ExportedHandler<Env>;

See Reply to emails from Workers for more information.
